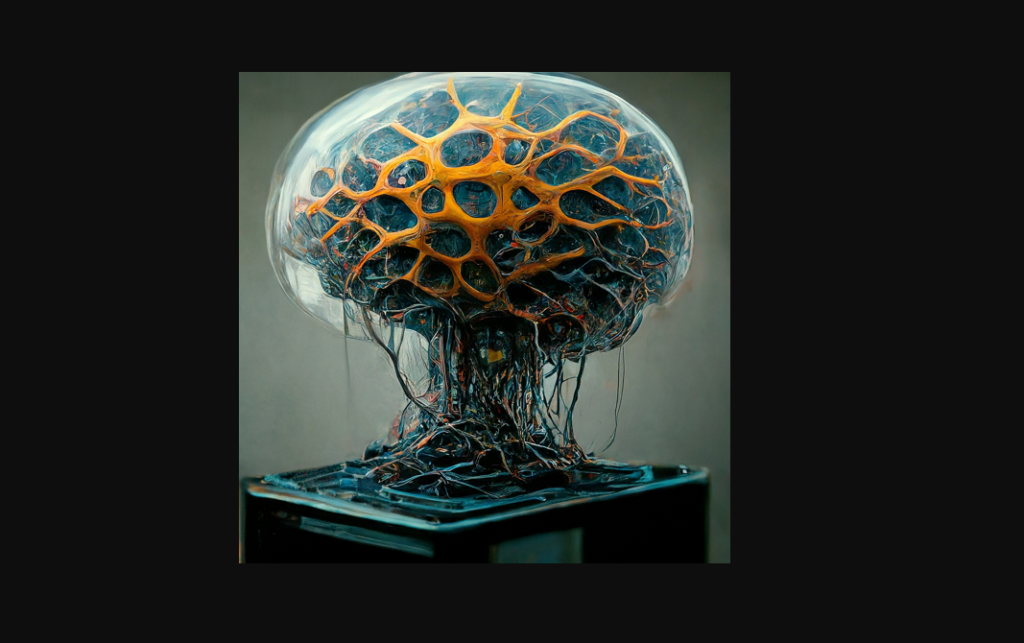

Deep learning is a powerful machine learning technique that has been revolutionizing numerous fields, from computer vision and natural language processing to healthcare and finance. At the heart of deep learning are neural networks, complex mathematical models inspired by the human brain. Training and deploying these networks efficiently requires specialized hardware. This eTAZ systems article explores the key considerations and challenges in hardware design for deep learning.

Understanding Deep Learning Hardware

Deep learning hardware is designed specifically to accelerate the computation-intensive tasks involved in training and running deep neural networks. These tasks include matrix multiplication, convolution operations, and nonlinear activations. To handle these demands efficiently, deep learning hardware often includes specialized architectures and accelerators.
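To make the three workloads named above concrete, here is a minimal pure-Python sketch of each one. The function names (`matmul`, `conv1d`, `relu`) and the sample inputs are illustrative assumptions, not part of any framework. Real deep learning libraries run the same arithmetic through heavily optimized kernels on the hardware discussed in this article.

```python
# Illustrative sketches only: real frameworks dispatch these operations
# to optimized kernels rather than Python loops.

def matmul(a, b):
    """Multiply an (m x k) matrix by a (k x n) matrix, both given as nested lists."""
    k = len(b)
    n = len(b[0])
    return [[sum(row[p] * b[p][j] for p in range(k)) for j in range(n)] for row in a]

def conv1d(signal, kernel):
    """Valid-mode 1D cross-correlation, the operation most deep learning
    layers refer to as 'convolution'."""
    width = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(width))
        for i in range(len(signal) - width + 1)
    ]

def relu(values):
    """Elementwise nonlinear activation: max(0, x)."""
    return [max(0.0, v) for v in values]

product = matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])   # [[19, 22], [43, 50]]
filtered = conv1d([1, 2, 3, 4], [1, 0, -1])            # [-2, -2]
activated = relu([-1.5, 0.0, 2.0])                     # [0.0, 0.0, 2.0]
```

Every output element in `matmul` and `conv1d` is an independent sum of products, which is why these operations map well onto hardware that can run many multiply-accumulate units in parallel.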

Key Components of Deep Learning Hardware

Central Processing Units (CPUs): Although CPUs are general-purpose processors, they can be used for deep learning tasks, especially for smaller models and prototyping. However, their performance can be limiting for larger models and demanding applications.

Graphics Processing Units (GPUs): GPUs were initially designed for rendering graphics, but their parallel processing capabilities make them well suited to deep learning. They handle large matrix operations efficiently and are frequently used for training deep neural networks.

Tensor Processing Units (TPUs): TPUs are application-specific integrated circuits (ASICs) developed by Google specifically for deep learning. They are optimized for matrix operations and other computations commonly found in deep neural networks, offering high performance and energy efficiency.

Field-Programmable Gate Arrays (FPGAs): FPGAs offer a flexible approach to hardware design, allowing developers to customize the hardware architecture for specific deep learning applications. They can be reconfigured to implement different neural network architectures and algorithms.

Hardware Design Considerations

When designing hardware for deep learning, several key factors should be considered:

Performance: The hardware must be capable of handling the computational demands of deep learning algorithms, including large datasets and complex models.

Memory: Sufficient memory is needed to store the neural network parameters, input data, and intermediate results.

Energy Efficiency: Deep learning models can be computationally intensive, so energy efficiency is important, especially for mobile and embedded devices.

Scalability: The hardware should scale to accommodate larger models and datasets as deep learning applications evolve.

Cost: The cost of hardware is a significant factor, especially for large-scale deployments.
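The memory consideration above can be turned into a rough back-of-the-envelope estimate. The sketch below assumes a hypothetical model with 100 million parameters, and assumes that training keeps four parameter-sized tensors in memory (weights, gradients, and two optimizer state tensors, as Adam-style optimizers do). It ignores input data and intermediate activations, which often dominate in practice, so treat the result as a lower bound rather than a sizing rule.

```python
# Bytes needed to store one value at each numeric precision.
BYTES_PER_VALUE = {"float32": 4, "float16": 2, "int8": 1}

def parameter_memory_gb(num_parameters, dtype="float32"):
    """Memory needed to hold the parameters alone, in decimal gigabytes."""
    return num_parameters * BYTES_PER_VALUE[dtype] / 1e9

def training_memory_gb(num_parameters, dtype="float32", copies=4):
    """Lower-bound training footprint.

    Assumption: `copies` parameter-sized tensors are resident at once
    (weights + gradients + two optimizer states). Activations and input
    batches are NOT counted.
    """
    return copies * parameter_memory_gb(num_parameters, dtype)

NUM_PARAMETERS = 100_000_000  # hypothetical model size

inference_fp32 = parameter_memory_gb(NUM_PARAMETERS, "float32")  # about 0.4 GB
inference_int8 = parameter_memory_gb(NUM_PARAMETERS, "int8")     # about 0.1 GB
training_fp32 = training_memory_gb(NUM_PARAMETERS, "float32")    # about 1.6 GB
```

The gap between the `int8` and `float32` figures illustrates why lower-precision formats matter for mobile and embedded devices: the same model needs a quarter of the memory.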

Challenges in Hardware Design

Designing hardware for deep learning presents several challenges:

Heterogeneous Computing: Deep learning often involves combining different hardware components, such as CPUs, GPUs, and TPUs, to optimize performance and efficiency. This can introduce complexity and challenges in software and hardware integration.

Memory Bandwidth: Deep learning algorithms often require large amounts of data to be transferred between memory and processing units. Insufficient memory bandwidth can bottleneck performance.

Power Consumption: High-performance deep learning hardware can consume significant power, especially when training large models. This can be a challenge for mobile and embedded applications.

Software Compatibility: Deep learning frameworks and libraries must be compatible with the chosen hardware platform to ensure seamless integration and good performance.
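The memory bandwidth challenge can be illustrated with a simple roofline-style estimate: an operation cannot run faster than the compute peak, nor faster than bandwidth multiplied by the number of operations it performs per byte moved. The sketch below is an idealized model, not a benchmark. The accelerator figures (100 TFLOP/s peak, 1 TB/s memory bandwidth) are hypothetical, and the byte count assumes each matrix is read or written exactly once.

```python
def matmul_arithmetic_intensity(m, k, n, bytes_per_value=4):
    """FLOPs per byte for an (m x k) by (k x n) matrix multiplication.

    Assumption: both inputs are read once and the output is written once.
    """
    flops = 2 * m * k * n  # one multiply and one add per inner-product term
    bytes_moved = (m * k + k * n + m * n) * bytes_per_value
    return flops / bytes_moved

def attainable_flops(intensity, peak_flops, bandwidth_bytes_per_s):
    """Roofline bound: limited by compute peak or by memory traffic."""
    return min(peak_flops, intensity * bandwidth_bytes_per_s)

PEAK_FLOPS = 100e12  # hypothetical accelerator: 100 TFLOP/s
BANDWIDTH = 1e12     # hypothetical accelerator: 1 TB/s

# Matrix-vector product: each weight is used once, so intensity is low.
matvec = matmul_arithmetic_intensity(4096, 4096, 1)
# Square matrix-matrix product: each value is reused many times.
square = matmul_arithmetic_intensity(4096, 4096, 4096)

matvec_rate = attainable_flops(matvec, PEAK_FLOPS, BANDWIDTH)
square_rate = attainable_flops(square, PEAK_FLOPS, BANDWIDTH)
```

Under these assumptions the matrix-vector product reaches only about 0.5 TFLOP/s, well under 1% of the peak, because it is limited by memory traffic, while the square matrix product is limited by compute. This is one reason batching inputs tends to improve hardware utilization.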

Conclusion

Hardware design for deep learning is a rapidly evolving field with significant implications for many industries. By carefully weighing the key components, design considerations, and challenges, organizations can select the most suitable hardware to accelerate their deep learning projects and drive innovation.

FAQs

What is the difference between CPUs, GPUs, and TPUs for deep learning?

CPUs are general-purpose processors suitable for smaller models and prototyping. GPUs are specialized for parallel processing and are well suited to training large models. TPUs are designed specifically for deep learning and offer high performance and energy efficiency.

Which hardware is best for deep learning?

The best hardware for deep learning depends on the specific requirements of the application, including model size, dataset size, and performance needs. TPUs are often considered a top choice for large-scale deep learning, but GPUs and CPUs can also be effective in certain scenarios.

What are the challenges in designing hardware for deep learning?

The main challenges include heterogeneous computing, memory bandwidth limitations, power consumption, and software compatibility.

How can hardware design accelerate deep learning?

By providing specialized architectures and accelerators, hardware design can significantly speed up the computation-intensive tasks involved in training and running deep neural networks.

What are the future trends in hardware design for deep learning?

Future trends include neuromorphic computing, which aims to mimic the structure and function of the human brain, and quantum computing, which has the potential to solve complex problems that are intractable for classical computers.